This week was a special one for the QA team: our automated browser tests were released after months of work. I want to give special thanks to our DevOps team, Miljenko, Darko and Lazar, for all their help with setting up our testing environment!

My journey to QA

First, I would like to give a short history of how I ended up here.

It all started in December 2015, when the Development and Customer Happiness teams decided that they needed someone in support to work on more difficult tasks than regular support. This called for a new Support Debugger position, which is where I started. Mainly, I debugged more complicated issues before escalating them to developers in order to save their time, or, if an issue was easy enough, fixed it myself (with code reviews, of course).

The Support Debugging team grew in the summer of 2016 when Nenad Conic joined me, which paved the way for the QA team that was needed at the time (this was before the Orion launch). We became an independent team in October, with Rados as our team leader.

QA testing process

Since we were new to this field, our first objective was to figure out how other QA departments work and to start building a foundation for our team. We quickly realized that no two QA departments are alike, so we decided to go with a solid mix of automated and manual tests.

Automated testing with Nightwatch.js

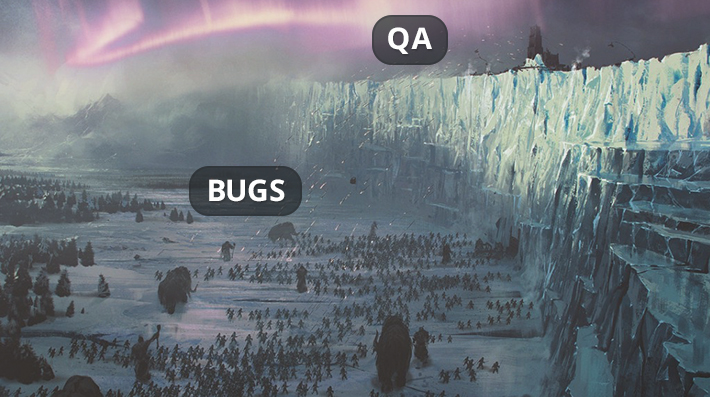

Bugs gather, and now my deploy begins.

If you are a Game of Thrones fan then you know that the name was inspired by Night’s Watch, an ancient order that protects the world of Westeros from the terrors of the North. Similar to what we do, but not as cool.

Other than the awesome name, we decided to go with Nightwatch.js because of its compatibility with ManageWP. Nightwatch.js uses Node.js with Selenium/WebDriver, which are currently the best tools for browser testing. However, in ManageWP we use AngularJS, which had issues with regular Selenium and didn’t work as intended. After some digging we found Nightwatch.js, which solved almost all of the issues we experienced with other tools, so we decided to stick with it.

Automated test issues

We were new to writing automated tests and made so many mistakes, but we also learned a lot in the past couple of months.

The first step was to learn to use this great tool and create accurate tests that won’t give false positives. Creating these tests proved to be more difficult than we anticipated: we had three major issues and ended up refactoring our whole code twice.

As a beginner, I started writing tests with a lot of pauses in them (manually setting how long the script will wait before it continues). This was our first major issue: coding like this made all of our tests useless if ManageWP took a couple of seconds longer to load, so tests broke unpredictably.

Luckily, Nightwatch.js has tools that helped us create custom commands that first wait for an element to be visible and then proceed with the test. With these we removed all of the pauses from the code, and every test now waits for an element before acting on it.
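To make this concrete, here is a minimal sketch of what such a custom command could look like. `waitForElementVisible` and `click` are standard Nightwatch commands; the command name `clickWhenVisible`, the 10 second timeout, the `#backup-button` selector and the small fake browser object are assumptions made for illustration and are not our actual code.

```javascript
// Hypothetical custom command: wait for an element, then click it.
// In a Nightwatch project this function would live in the custom commands
// folder and be exported as `exports.command`.
const DEFAULT_TIMEOUT_MS = 10000; // assumed value, tune it for your app

function clickWhenVisible(selector, timeoutMs = DEFAULT_TIMEOUT_MS) {
  // `this` is the Nightwatch browser object when run as a custom command.
  this.waitForElementVisible(selector, timeoutMs);
  this.click(selector);
  return this; // keep the command chainable
}

// Tiny stand-in for the browser object so the sketch runs without Selenium.
// It only records which commands were called and with which arguments.
function createFakeBrowser() {
  const calls = [];
  return {
    calls,
    waitForElementVisible(selector, timeoutMs) {
      calls.push(['waitForElementVisible', selector, timeoutMs]);
      return this;
    },
    click(selector) {
      calls.push(['click', selector]);
      return this;
    },
    clickWhenVisible,
  };
}

const browser = createFakeBrowser();
browser.clickWhenVisible('#backup-button'); // placeholder selector
console.log(browser.calls);
```

The point is that the wait is tied to the element rather than to a fixed number of seconds, so a slow page load delays the click instead of breaking the test.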

Here we also changed how scripts flow. Each script is now like a user story: it goes through a flow, and at the end it resets the environment to prepare for the next script (e.g. it deactivates the tool that was being tested).
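As a rough sketch of that idea, a script can be written as an ordered set of named steps, with the reset as the last one. Nightwatch runs the steps of a test module in the order they are defined; the step names, the selectors and the recording browser below are hypothetical, and in a real project the object would be exported with `module.exports` and run by Nightwatch itself.

```javascript
const TIMEOUT_MS = 10000; // assumed timeout

// Hypothetical user story for one tool; all selectors are placeholders.
const toolStory = {
  'activate the tool'(browser) {
    browser.waitForElementVisible('#tool-activate', TIMEOUT_MS).click('#tool-activate');
  },
  'verify the tool is active'(browser) {
    browser.waitForElementVisible('#tool-active-badge', TIMEOUT_MS);
  },
  // Last step: put the environment back the way the next script expects it.
  'reset: deactivate the tool'(browser) {
    browser.waitForElementVisible('#tool-deactivate', TIMEOUT_MS).click('#tool-deactivate');
    browser.end();
  },
};

// Stand-in browser that records calls, so the sketch runs without Selenium.
function createRecordingBrowser() {
  const log = [];
  const record = (name) => function (...args) {
    log.push(`${name}(${args.join(', ')})`);
    return this; // chainable, like the real commands
  };
  return {
    log,
    waitForElementVisible: record('waitForElementVisible'),
    click: record('click'),
    end: record('end'),
  };
}

const recorder = createRecordingBrowser();
for (const step of Object.values(toolStory)) {
  step(recorder); // run the steps in definition order
}
console.log(recorder.log);
```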

The second issue was that our tests broke constantly because the ManageWP code is improved on a regular basis. Every time developers changed something, we needed to update our tests. Unfortunately, our scripts were mostly independent of one another, so we had to fix them one by one.

The last major issue was that when a script failed, the next one would start without the environment being reset, which caused a domino effect with a lot of false positives.

Automated test solutions

In order to resolve these issues we decided to make three major changes:

- Add unique IDs to the ManageWP dashboard that only our tests use; this way small changes won’t break our tests

- Centralize repeating code

- Tests for our tests

Centralizing all of our repeating code into custom commands helps us make a change only once.
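One way to combine the first two changes, sketched here under assumptions, is to keep every test-only ID in a single shared module and have all scripts look selectors up by name. The IDs and names below (`#qa-add-website`, `selectorFor`, and so on) are invented for this example and are not the real ManageWP IDs.

```javascript
// Hypothetical test-only IDs, kept in one place so a change is made once.
const SELECTORS = Object.freeze({
  addWebsiteButton: '#qa-add-website',
  backupToggle: '#qa-backup-toggle',
});

// Look up a selector by name and fail loudly on typos, instead of letting
// a script wait for an element that can never appear.
function selectorFor(name) {
  if (!Object.prototype.hasOwnProperty.call(SELECTORS, name)) {
    throw new Error(`Unknown test selector: ${name}`);
  }
  return SELECTORS[name];
}

console.log(selectorFor('backupToggle'));
```

If a developer renames or moves an element, only the entry in this one map needs to change, and every script that uses it keeps working.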

It’s ironic, but in the end we needed to create tests that would check if the environment is first ready for testing and then allow the test to continue.
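The idea can be sketched in plain JavaScript, independent of Nightwatch: before a script runs, check the environment and skip the script with a clear reason if it is not clean. The state fields (`loggedIn`, `activeTools`) and function names are assumptions for illustration; a real check would inspect the dashboard through the browser.

```javascript
// Hypothetical readiness check: returns a list of reasons the environment
// is not ready. An empty list means the script may run.
function findEnvironmentProblems(state) {
  const problems = [];
  if (!state.loggedIn) {
    problems.push('user is not logged in');
  }
  if (state.activeTools.length > 0) {
    problems.push(`tools still active: ${state.activeTools.join(', ')}`);
  }
  return problems;
}

// Run a script only if the environment is ready; otherwise report why not.
function runScript(name, state, script) {
  const problems = findEnvironmentProblems(state);
  if (problems.length > 0) {
    return { name, status: 'skipped', problems };
  }
  script(state);
  return { name, status: 'ran', problems: [] };
}

// A previous script failed and left the backup tool switched on.
const dirtyState = { loggedIn: true, activeTools: ['backups'] };
const cleanState = { loggedIn: true, activeTools: [] };

const skipped = runScript('uptime monitor', dirtyState, () => {});
const ran = runScript('uptime monitor', cleanState, () => {});
console.log(skipped, ran);
```

Reporting "environment not ready" instead of running anyway is what stops one failed script from turning into a chain of misleading failures.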

Fun fact: during development, the tests detected some bugs, which our developers then fixed.

Manual testing and quality advocates

Although we are very focused on covering the whole application with automated tests, our primary focus is still manual testing. We like to put ourselves in the shoes of our users. Almost everything goes through our hands before being deployed to production.

One of the many charms of being in QA is having a little bit of knowledge in all of the fields, being a “wearer of many hats”. Thanks to this, we can understand developers, give useful and realistic feedback and suggestions, and work together with other teams to create top-quality features.

That’s why, when doing manual testing, we consider ourselves more Quality Advocates than Quality Assurance, since we do more than just test the product.

There is a great article by Alister Scott on Quality Advocates where he wrote the following:

A Quality Advocate (QA) in an agile team advocates quality. Whilst their responsibilities include testing, they aren’t limited to just that. They work closely with other team members to build quality in.

I recommend reading his awesome blog post on Quality Advocates.

This was my short story of how the QA department was created. QA testers from around the world, I’m interested to know: how do you test your product?
